Environment preparation

Hardware environment

Operating system: CentOS Stream 9

CPU: 4

Memory: 8 GB

Disk: 50 GB

Software environment

Docker version: 26.1.0

Docker Compose version: v2.26.1

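Deployment procedure

Prepare the base environment and install Docker

1. Install the yum-utils package (which provides the yum-config-manager utility) and set up the repository with the following commands.

    # Install the yum-utils package
    yum install -y yum-utils
    # Add the docker-ce repository
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo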

2. Install the latest version of Docker and its components with the following command.

    yum install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

3. Start the Docker service and enable it at boot with the following commands.

    # Start the Docker service
    systemctl start docker
    # Enable Docker at boot
    systemctl enable docker
    # Check the Docker service status
    systemctl status docker

4. Change the Docker data directory to "/data/dockerData", then restart the Docker service for the change to take effect.

    # Create the Docker daemon configuration file
    # Edit /etc/docker/daemon.json to set the Docker data directory
    vi /etc/docker/daemon.json

Contents of /etc/docker/daemon.json:

    {
      "data-root": "/data/dockerData"
    }

Then restart Docker:

    # Restart the Docker service so the configuration takes effect
    systemctl restart docker
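Before restarting Docker it can help to confirm that the file really contains the intended path. The following is a minimal sketch; the function name `docker_data_root` is hypothetical, and the sed-based extraction is an assumption that works for a simple daemon.json like the one above, not a full JSON parser.

```shell
#!/usr/bin/env bash
# Print the "data-root" value found in a daemon.json file (first match only).
# Usage: docker_data_root [FILE]; FILE defaults to /etc/docker/daemon.json.
# Note: this is a sed-based extraction, not a real JSON parser.
docker_data_root() {
    local file="${1:-/etc/docker/daemon.json}"
    sed -n 's/.*"data-root"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$file" | head -n 1
}
```

After the restart, the value printed by `docker_data_root` should match what the daemon reports through `docker info --format '{{ .DockerRootDir }}'`.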

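5. Set the kernel parameter vm.max_map_count (the maximum number of memory-mapped areas a process may have) to 262144, then apply and display the kernel parameters in effect.

    echo "vm.max_map_count=262144" >> /etc/sysctl.conf
    sysctl -p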
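Elasticsearch refuses to start in production mode when this value is below 262144, so a quick check is worthwhile. The sketch below assumes a Linux host that exposes the setting under /proc; the function name `check_map_count` is hypothetical.

```shell
#!/usr/bin/env bash
# Compare a vm.max_map_count value with the minimum used in this guide.
# Usage: check_map_count [VALUE]; VALUE defaults to the live kernel setting.
check_map_count() {
    local required=262144
    local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
    if [ "$current" -ge "$required" ]; then
        echo "ok: vm.max_map_count=$current"
    else
        echo "too low: vm.max_map_count=$current (need >= $required)"
        return 1
    fi
}
```

Calling `check_map_count` with no argument reads the running kernel's value; passing a number makes it easy to try the function without touching the system.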

6. Configure the firewall rules.

    firewall-cmd --add-port=9200/tcp --permanent
    firewall-cmd --add-port=80/tcp --permanent
    firewall-cmd --add-port=443/tcp --permanent
    firewall-cmd --add-port=5601/tcp --permanent
    firewall-cmd --reload
    firewall-cmd --list-all

Deploy the ELK cluster

1. Create the directories that the containers need and grant the required access permissions.

    cd /data
    mkdir es-node1 es-node2 es-node3 elk-kibana
    chmod g+rwx es-node1 es-node2 es-node3 elk-kibana
    chgrp 0 es-node1 es-node2 es-node3 elk-kibana

2、在/data/script 目录下,创建并编辑 .env 文件,用来配置elk的环境变量。

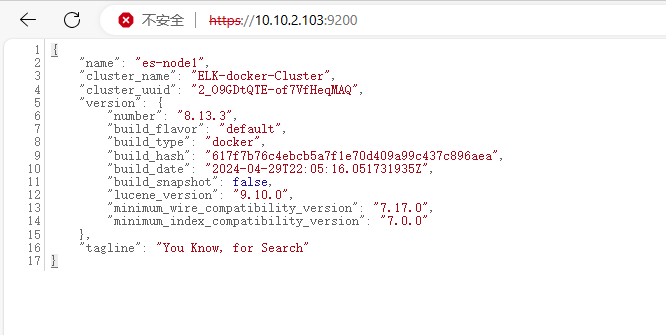

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 mkdir /data/script vi /data/script/.env **************************.env************************** # Elasticsearch的密码 ELASTIC_PASSWORD=elk#bd@123 # Kibana的密码 KIBANA_PASSWORD=elk#bd@123 # Elastic Stack的版本号 STACK_VERSION=8.13.3 # Elastic Stack的集群名称 CLUSTER_NAME=ELK-docker-Cluster # 指定Elastic Stack的许可证类型 LICENSE=basic # 指定Elasticsearch的端口号 ES_PORT=9200 # 指定Kibana的端口号 KIBANA_PORT=5601 # 指定内存限制 MEM_LIMIT=2147483648 # 指定Docker Compose项目的名称 COMPOSE_PROJECT_NAME=elk-docker-project **************************.env**************************
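Because the compose file substitutes these variables at startup, a missing entry tends to surface later as a confusing container error. The sketch below lists any variable that elk.yml references but the .env file leaves undefined or empty; the function name `missing_env_vars` is hypothetical, and the variable list is taken from the .env file above.

```shell
#!/usr/bin/env bash
# Print the names of required variables that FILE does not define.
# Usage: missing_env_vars FILE; prints one missing name per line, nothing if complete.
missing_env_vars() {
    local file="$1" name
    for name in ELASTIC_PASSWORD KIBANA_PASSWORD STACK_VERSION CLUSTER_NAME \
                LICENSE ES_PORT KIBANA_PORT MEM_LIMIT; do
        # A variable counts as defined when a line starts with NAME= and has a non-empty value.
        grep -Eq "^${name}=.+" "$file" || echo "$name"
    done
}
```

Running `missing_env_vars /data/script/.env` should print nothing. As a further check, `docker compose -f /data/script/elk.yml config` renders the compose file with the variables substituted.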

3. Create the directories and configuration files that the Logstash container needs.

    mkdir -p /data/logstash/config /data/logstash/pipeline
    echo 'http.host: "0.0.0.0"' > /data/logstash/config/logstash.yml
    vi /data/logstash/pipeline/logstash.conf

Contents of /data/logstash/pipeline/logstash.conf:

    input {
      beats {
        port => 5044
      }
    }

    output {
      elasticsearch {
        hosts => ["https://es-node1:9200"]
        index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
        user => "elastic"
        password => "elk#bd@123"
        cacert => "/usr/share/logstash/config/certs/ca/ca.crt"
      }
    }
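The index setting above expands to the Beat name, the Beat version, and the event date, with the date taken from the event timestamp in UTC. As a rough illustration, the sketch below builds the name that today's Filebeat 8.13.3 events would be written to; the function name `expected_index` is hypothetical.

```shell
#!/usr/bin/env bash
# Build the index name produced by the pattern
# %{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}.
# Usage: expected_index BEAT VERSION [DATE]; DATE defaults to today in UTC.
expected_index() {
    local beat="$1" version="$2"
    local day="${3:-$(date -u +%Y.%m.%d)}"
    echo "${beat}-${version}-${day}"
}
```

For example, `expected_index filebeat 8.13.3` gives the index to look for once logs start flowing, and a pattern such as `filebeat-*` covers all of the daily indices.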

4. Create the Docker Compose file that orchestrates the Elasticsearch setup container, the three Elasticsearch nodes, Kibana, and Logstash.

    vi /data/script/elk.yml

Contents of /data/script/elk.yml:

    services:
      es-setup:
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        container_name: es-setup
        volumes:
          - /data/elk-certs:/usr/share/elasticsearch/config/certs
        user: "0"
        networks:
          net:
            ipv4_address: 172.20.100.10
        command: >
          bash -c '
            if [ x${ELASTIC_PASSWORD} == x ]; then
              echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
              exit 1;
            elif [ x${KIBANA_PASSWORD} == x ]; then
              echo "Set the KIBANA_PASSWORD environment variable in the .env file";
              exit 1;
            fi;
            if [ ! -f config/certs/ca.zip ]; then
              echo "Creating CA";
              bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
              unzip config/certs/ca.zip -d config/certs;
            fi;
            if [ ! -f config/certs/certs.zip ]; then
              echo "Creating certs";
              echo -ne \
              "instances:\n"\
              "  - name: es-node1\n"\
              "    dns:\n"\
              "      - es-node1\n"\
              "      - localhost\n"\
              "    ip:\n"\
              "      - 127.0.0.1\n"\
              "  - name: es-node2\n"\
              "    dns:\n"\
              "      - es-node2\n"\
              "      - localhost\n"\
              "    ip:\n"\
              "      - 127.0.0.1\n"\
              "  - name: es-node3\n"\
              "    dns:\n"\
              "      - es-node3\n"\
              "      - localhost\n"\
              "    ip:\n"\
              "      - 127.0.0.1\n"\
              > config/certs/instances.yml;
              bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
              unzip config/certs/certs.zip -d config/certs;
            fi;
            echo "Setting file permissions";
            chown -R root:root config/certs;
            find . -type d -exec chmod 750 \{\} \;;
            find . -type f -exec chmod 640 \{\} \;;
            echo "Waiting for Elasticsearch availability";
            until curl -s --cacert config/certs/ca/ca.crt https://es-node1:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
            echo "Setting kibana_system password";
            until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es-node1:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
            echo "All done!";
          '
        healthcheck:
          test: ["CMD-SHELL", "[ -f config/certs/es-node1/es-node1.crt ]"]
          interval: 1s
          timeout: 5s
          retries: 120

      es-node1:
        depends_on:
          es-setup:
            condition: service_healthy
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        container_name: es-node1
        restart: always
        networks:
          net:
            ipv4_address: 172.20.100.11
        volumes:
          - /data/elk-certs:/usr/share/elasticsearch/config/certs
          - /data/es-node1:/usr/share/elasticsearch/data
        ports:
          - ${ES_PORT}:9200
        environment:
          - node.name=es-node1
          - cluster.name=${CLUSTER_NAME}
          - cluster.initial_master_nodes=es-node1,es-node2,es-node3
          - discovery.seed_hosts=es-node2,es-node3
          - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
          - bootstrap.memory_lock=true
          - xpack.security.enabled=true
          - xpack.security.http.ssl.enabled=true
          - xpack.security.http.ssl.key=certs/es-node1/es-node1.key
          - xpack.security.http.ssl.certificate=certs/es-node1/es-node1.crt
          - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.enabled=true
          - xpack.security.transport.ssl.key=certs/es-node1/es-node1.key
          - xpack.security.transport.ssl.certificate=certs/es-node1/es-node1.crt
          - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.verification_mode=certificate
          - xpack.license.self_generated.type=${LICENSE}
        mem_limit: ${MEM_LIMIT}
        ulimits:
          memlock:
            soft: -1
            hard: -1
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120
        extra_hosts:
          - "es-node1:172.20.100.11"
          - "es-node2:172.20.100.12"
          - "es-node3:172.20.100.13"

      es-node2:
        depends_on:
          - es-node1
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        container_name: es-node2
        restart: always
        networks:
          net:
            ipv4_address: 172.20.100.12
        volumes:
          - /data/elk-certs:/usr/share/elasticsearch/config/certs
          - /data/es-node2:/usr/share/elasticsearch/data
        environment:
          - node.name=es-node2
          - cluster.name=${CLUSTER_NAME}
          - cluster.initial_master_nodes=es-node1,es-node2,es-node3
          - discovery.seed_hosts=es-node1,es-node3
          - bootstrap.memory_lock=true
          - xpack.security.enabled=true
          - xpack.security.http.ssl.enabled=true
          - xpack.security.http.ssl.key=certs/es-node2/es-node2.key
          - xpack.security.http.ssl.certificate=certs/es-node2/es-node2.crt
          - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.enabled=true
          - xpack.security.transport.ssl.key=certs/es-node2/es-node2.key
          - xpack.security.transport.ssl.certificate=certs/es-node2/es-node2.crt
          - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.verification_mode=certificate
          - xpack.license.self_generated.type=${LICENSE}
        mem_limit: ${MEM_LIMIT}
        ulimits:
          memlock:
            soft: -1
            hard: -1
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120
        extra_hosts:
          - "es-node1:172.20.100.11"
          - "es-node2:172.20.100.12"
          - "es-node3:172.20.100.13"

      es-node3:
        depends_on:
          - es-node2
        image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
        container_name: es-node3
        restart: always
        networks:
          net:
            ipv4_address: 172.20.100.13
        volumes:
          - /data/elk-certs:/usr/share/elasticsearch/config/certs
          - /data/es-node3:/usr/share/elasticsearch/data
        environment:
          - node.name=es-node3
          - cluster.name=${CLUSTER_NAME}
          - cluster.initial_master_nodes=es-node1,es-node2,es-node3
          - discovery.seed_hosts=es-node1,es-node2
          - bootstrap.memory_lock=true
          - xpack.security.enabled=true
          - xpack.security.http.ssl.enabled=true
          - xpack.security.http.ssl.key=certs/es-node3/es-node3.key
          - xpack.security.http.ssl.certificate=certs/es-node3/es-node3.crt
          - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.enabled=true
          - xpack.security.transport.ssl.key=certs/es-node3/es-node3.key
          - xpack.security.transport.ssl.certificate=certs/es-node3/es-node3.crt
          - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
          - xpack.security.transport.ssl.verification_mode=certificate
          - xpack.license.self_generated.type=${LICENSE}
        mem_limit: ${MEM_LIMIT}
        ulimits:
          memlock:
            soft: -1
            hard: -1
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120
        extra_hosts:
          - "es-node1:172.20.100.11"
          - "es-node2:172.20.100.12"
          - "es-node3:172.20.100.13"

      kibana:
        depends_on:
          es-node1:
            condition: service_healthy
          es-node2:
            condition: service_healthy
          es-node3:
            condition: service_healthy
        image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
        container_name: kibana
        restart: always
        networks:
          net:
            ipv4_address: 172.20.100.14
        volumes:
          - /data/elk-certs:/usr/share/kibana/config/certs
          # Uses the elk-kibana directory created in step 1
          - /data/elk-kibana:/usr/share/kibana/data
        ports:
          - ${KIBANA_PORT}:5601
        environment:
          - SERVERNAME=kibana
          - ELASTICSEARCH_HOSTS=https://es-node1:9200
          - ELASTICSEARCH_USERNAME=kibana_system
          - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
          - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
        mem_limit: ${MEM_LIMIT}
        healthcheck:
          test:
            [
              "CMD-SHELL",
              "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
            ]
          interval: 10s
          timeout: 10s
          retries: 120

      logstash:
        image: docker.elastic.co/logstash/logstash:${STACK_VERSION}
        container_name: logstash
        volumes:
          - /data/elk-certs:/usr/share/logstash/config/certs
          - /data/logstash/pipeline:/usr/share/logstash/pipeline
          - /data/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
        user: "0"
        restart: always
        ports:
          - 5044:5044
        networks:
          net:
            ipv4_address: 172.20.100.15
        extra_hosts:
          - "es-node1:172.20.100.11"
          - "es-node2:172.20.100.12"
          - "es-node3:172.20.100.13"

    networks:
      net:
        driver: bridge
        ipam:
          config:
            - subnet: 172.20.100.0/24

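Run the following command to create the containers.

    docker compose -f /data/script/elk.yml up -d

The command reports its progress while pulling images and starting containers:

    [+] Running 42/16
    [+] Running 7/7

Once the containers are created, check that each one is running normally.

    docker ps
    CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES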
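Container status alone does not show whether the three nodes have formed a cluster; the Elasticsearch `_cluster/health` API does. The sketch below only extracts the `status` field from such a response. The function name `health_status` is hypothetical, and the sed-based parsing is an assumption that suits this simple response, not a general JSON parser.

```shell
#!/usr/bin/env bash
# Extract the "status" field (green, yellow or red) from a _cluster/health JSON response.
# Usage: health_status JSON_STRING
health_status() {
    printf '%s\n' "$1" | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}
```

On the Docker host, assuming the password from the .env file is available as `$ELASTIC_PASSWORD`, it could be used as `health_status "$(curl -s --cacert /data/elk-certs/ca/ca.crt -u "elastic:$ELASTIC_PASSWORD" https://localhost:9200/_cluster/health)"`. A healthy three-node cluster should report green.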

5. Modify the Kibana configuration file to switch the interface language to Chinese.

    docker cp kibana:/usr/share/kibana/config/kibana.yml .
    vi kibana.yml

Contents of kibana.yml:

    #
    # ** THIS IS AN AUTO-GENERATED FILE **
    #

    # Default Kibana configuration for docker target
    server.host: "0.0.0.0"
    server.shutdownTimeout: "5s"
    elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true
    i18n.locale: "zh-CN"
    server.publicBaseUrl: "http://localhost:5601/"

Copy the file back into the container and restart Kibana:

    docker cp kibana.yml kibana:/usr/share/kibana/config/kibana.yml
    docker restart kibana

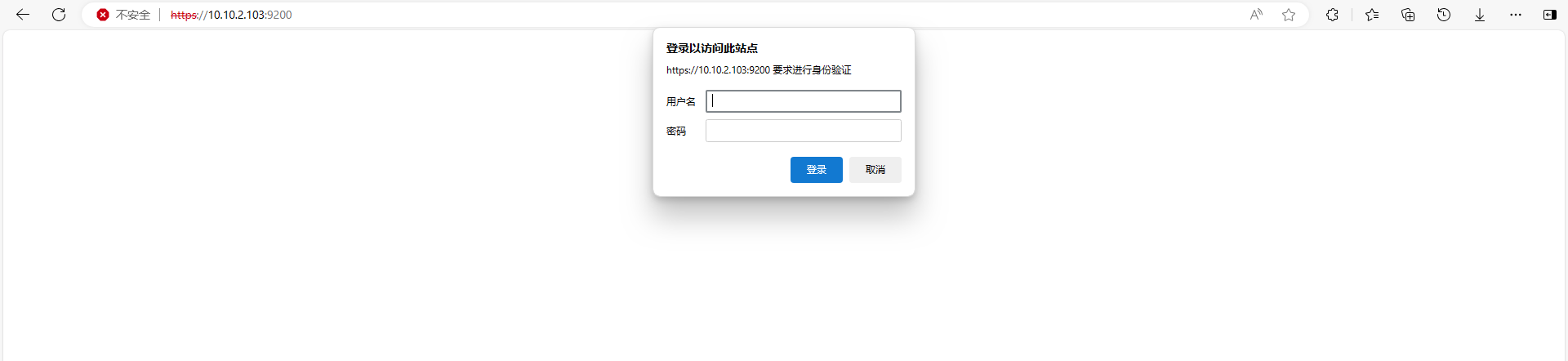

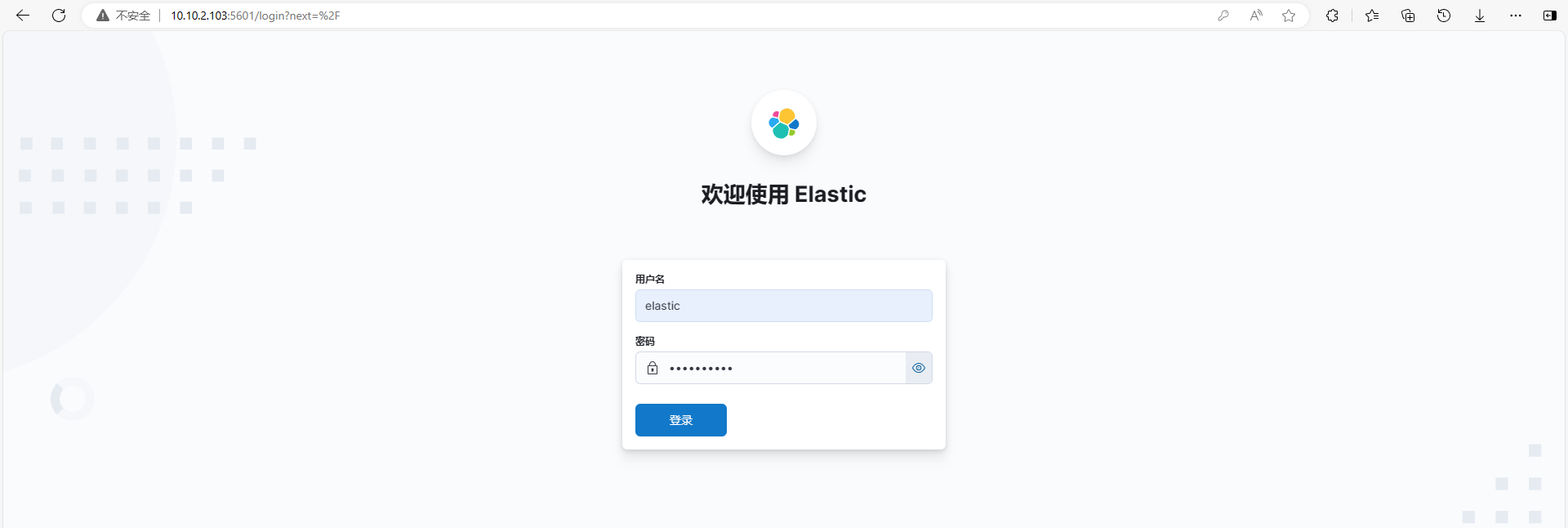

6. In a browser, open https://10.10.2.103:9200/ and http://10.10.2.103:5601/ in turn, enter the username and password, and check the result, as shown in the figures.

Ship Linux logs to verify the deployment

1. Install the Filebeat log shipper

(1) Install Filebeat from the RPM package.

    # Download the RPM package
    curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-8.13.3-x86_64.rpm
    # Install it
    rpm -vi filebeat-8.13.3-x86_64.rpm

(2) Start Filebeat and enable it at boot.

    # Start Filebeat
    systemctl start filebeat
    # Check the Filebeat status
    systemctl status filebeat
    # Enable Filebeat at boot
    systemctl enable filebeat

2. Configure Filebeat to collect Linux logs

(1) Edit the Filebeat configuration file so that logs are sent to the Logstash service.

    # Back up the original Filebeat configuration file
    mv /etc/filebeat/filebeat.yml /etc/filebeat/filebeat.yml.bak
    # Create a new Filebeat configuration file
    vi /etc/filebeat/filebeat.yml

Contents of /etc/filebeat/filebeat.yml:

    # Input: watch the log files
    filebeat.inputs:
      - type: log
        enabled: true
        # Paths of the Linux system log files
        paths:
          - /var/log/messages*
        # Tag the log type as elk-linux
        fields:
          type: elk-linux
        # Add the extra fields at the root level of the event
        fields_under_root: true

    # Output: send to Logstash
    # Address and port of the Logstash service
    output.logstash:
      hosts: ["10.10.2.103:5044"]
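Filebeat can only deliver events if the Logstash port is reachable from the machine it runs on. A minimal connectivity check is sketched below; it assumes bash (for its /dev/tcp pseudo-device) and the coreutils `timeout` command, and the function name `port_open` is hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 when a TCP connection to HOST:PORT succeeds within 3 seconds.
# Relies on bash's /dev/tcp pseudo-device and the coreutils `timeout` command.
# Usage: port_open HOST PORT
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For the configuration above, `port_open 10.10.2.103 5044 && echo reachable` run on the Filebeat host gives a quick answer. Filebeat's own `filebeat test output` subcommand performs a similar check against the configured output.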

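(2) Restart the Filebeat service and verify that the configuration has taken effect.

    # Restart Filebeat
    systemctl restart filebeat
    # If a stale lock file blocks startup, remove it before starting Filebeat again
    rm -rf /var/lib/filebeat/filebeat.lock
    # Run Filebeat in the foreground and watch its log output
    filebeat -e -c /etc/filebeat/filebeat.yml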

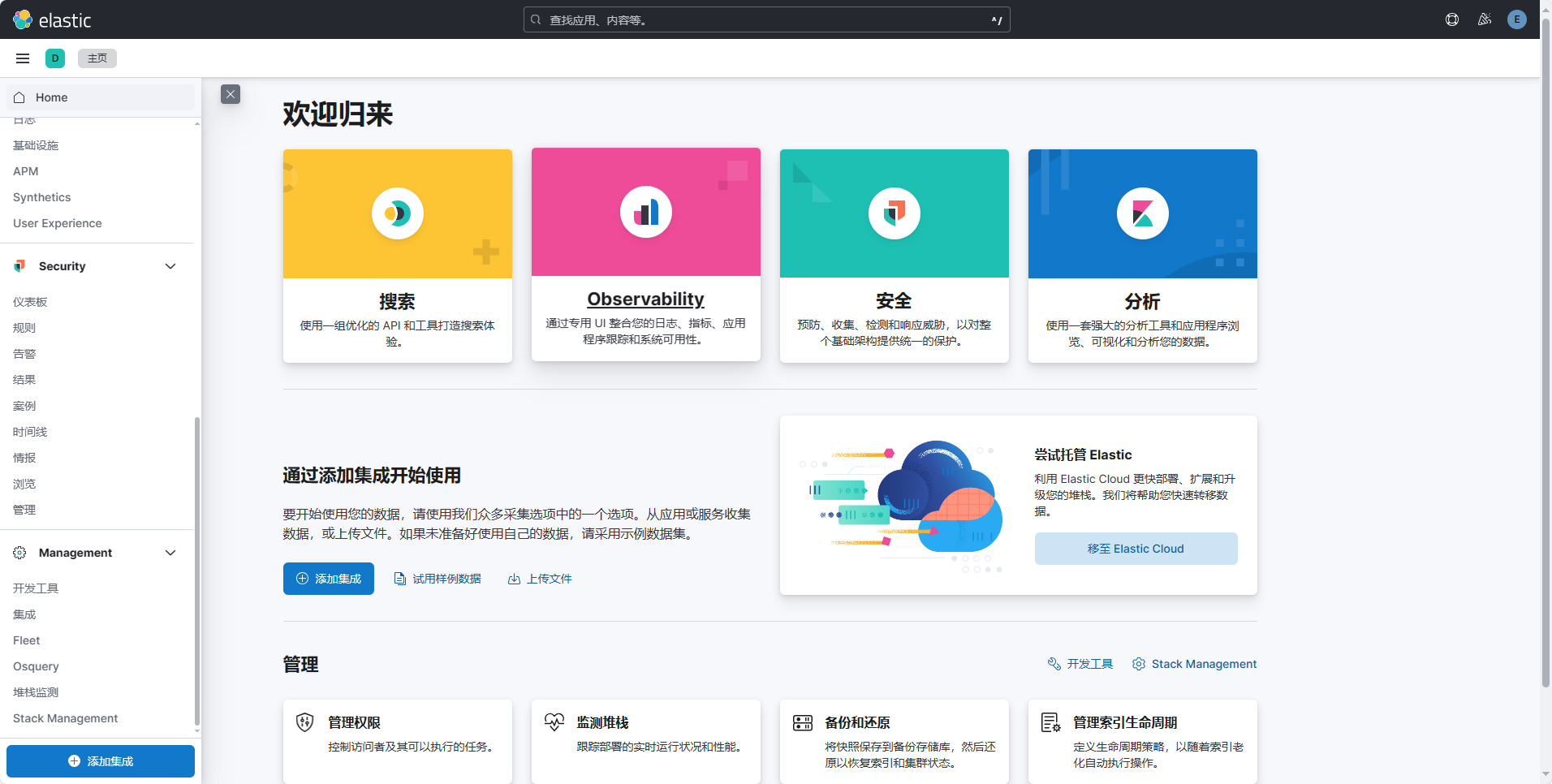

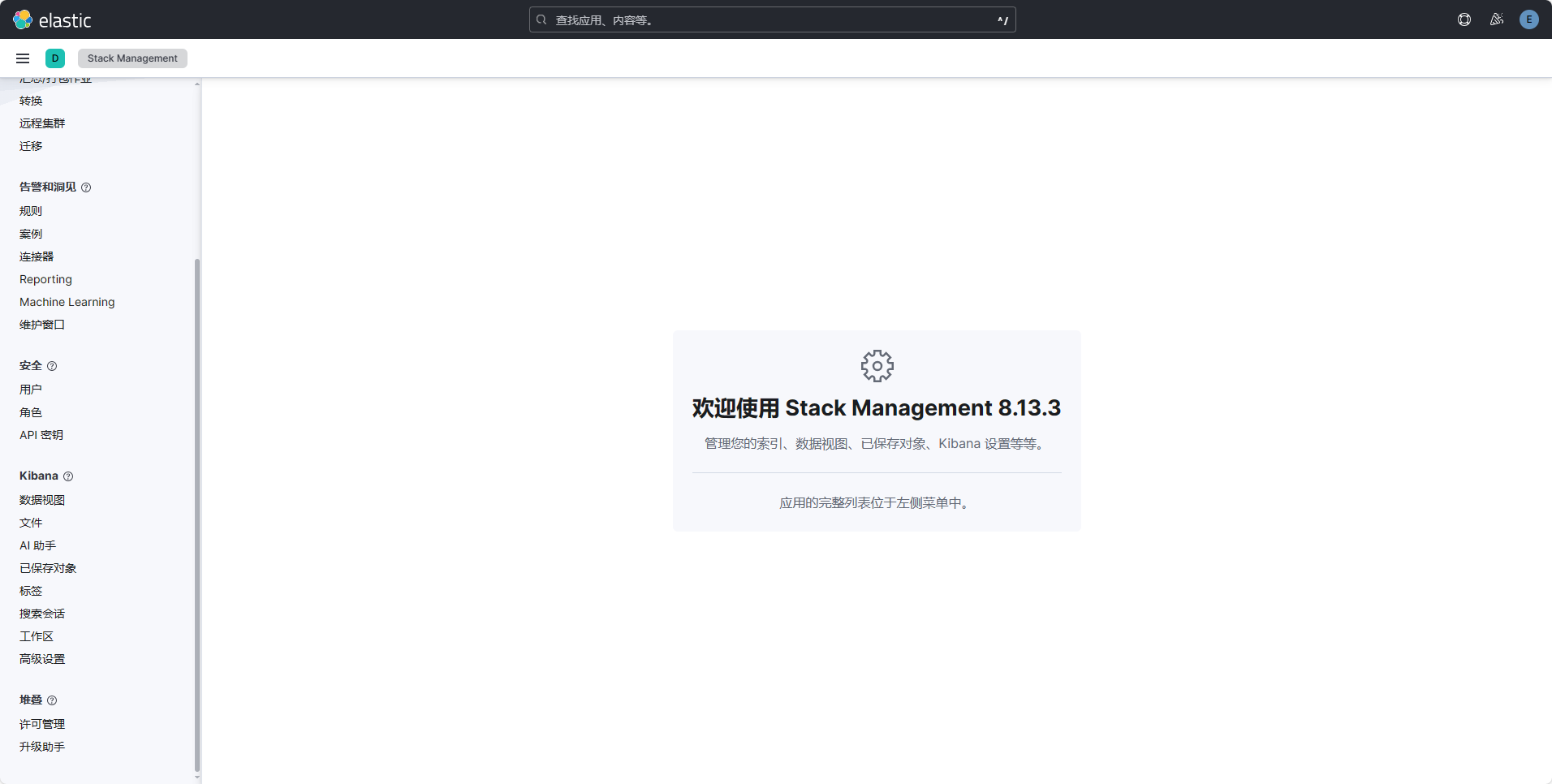

3. Log in to Kibana in a browser and click "Stack Management" in the left-hand menu to manage and create data views, as shown below.

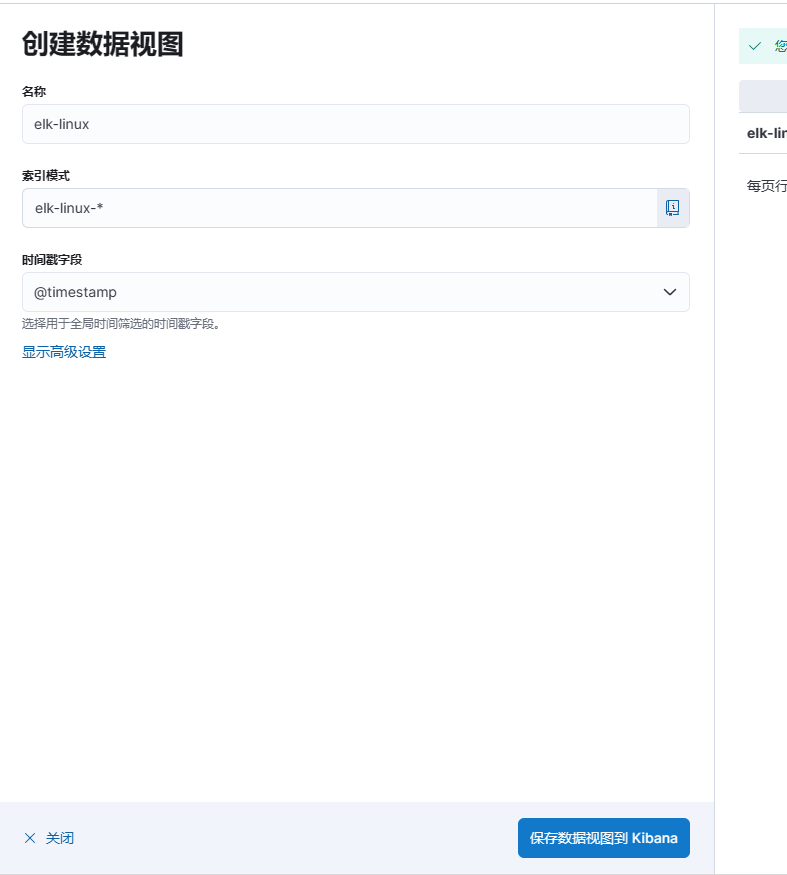

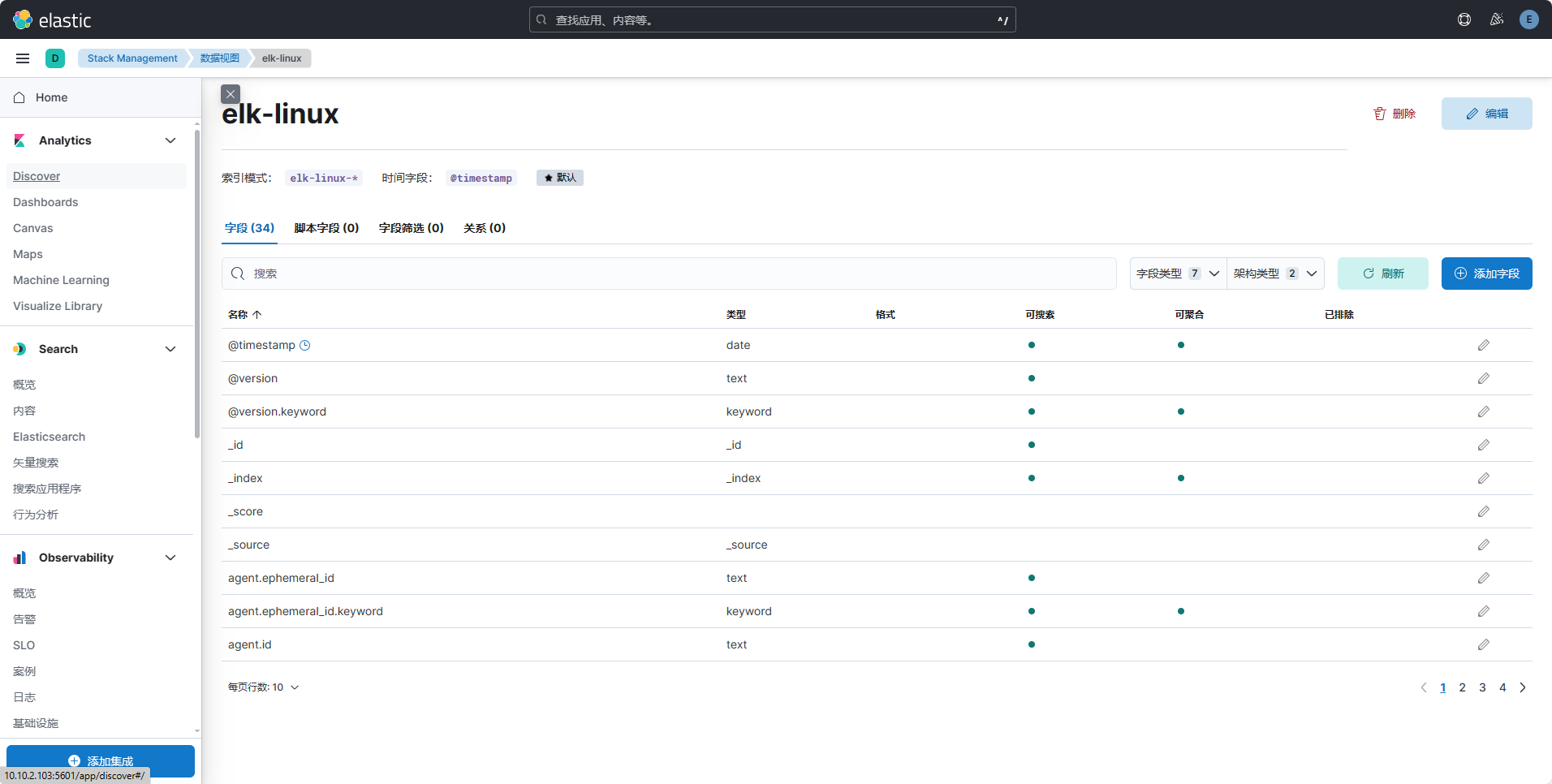

4. On the "Stack Management" page, click "Data Views", select the index pattern, and create the "elk-linux" view, as shown below.

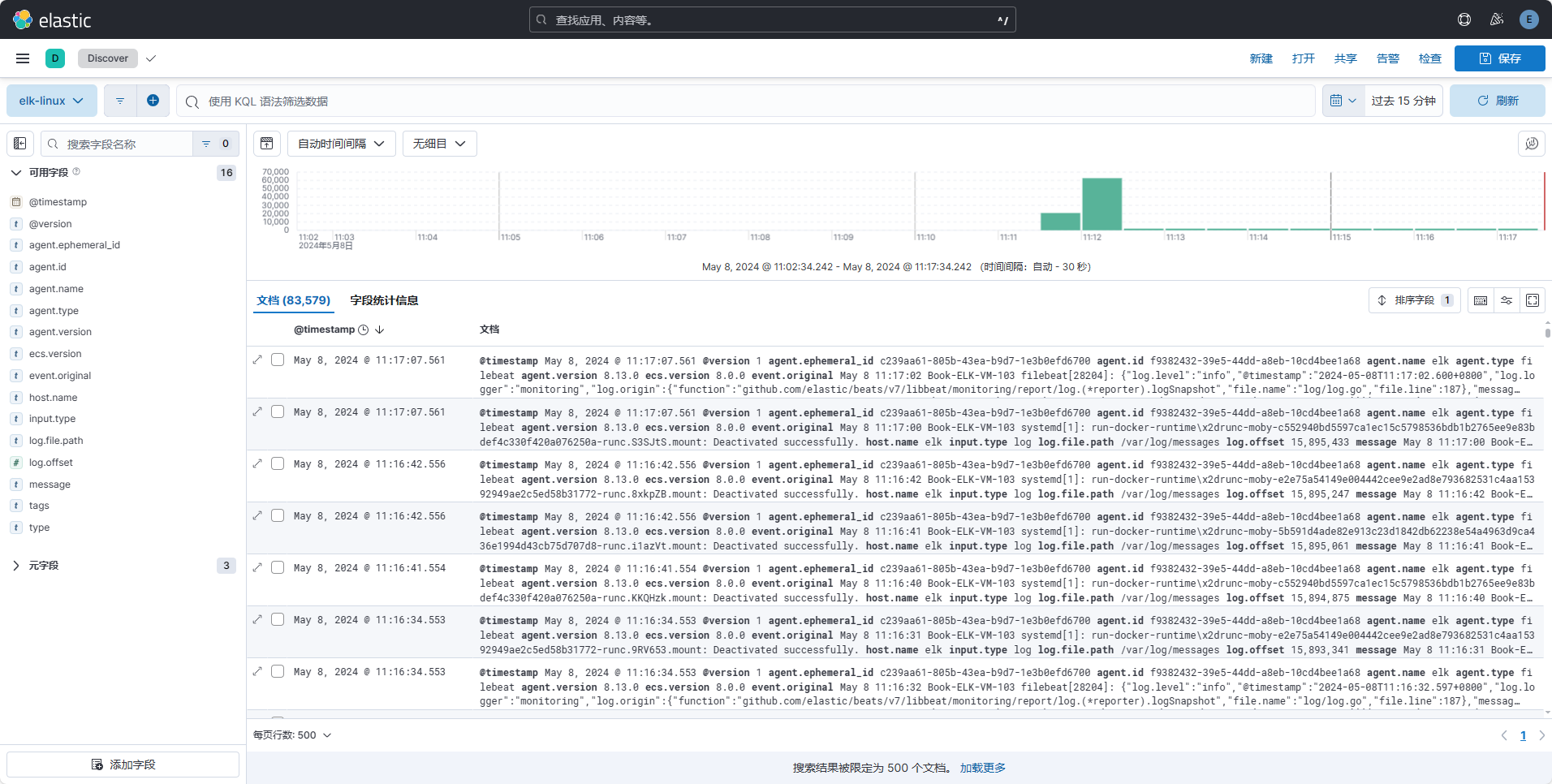

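5. Once the data view has been created, select "Discover" in the left-hand menu to inspect the Linux log entries in detail, as shown below. This completes the ELK cluster setup.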